It’s a bit of a challenge to keep up with all the changes, updates and new stuff coming out for Fabric. As I’m not really invested in the PowerBI part of the data platform (yay pie charts ;)), some things that are very common for the PowerBI community are very new to me. I have it on good authority that this blog covers a feature that is well known within PowerBI but quite new in the data engineering part. I need to add that, at the time of writing, only the PowerBI side of things is fully supported, but I have good hopes that pipelines and notebooks will be supported as well.

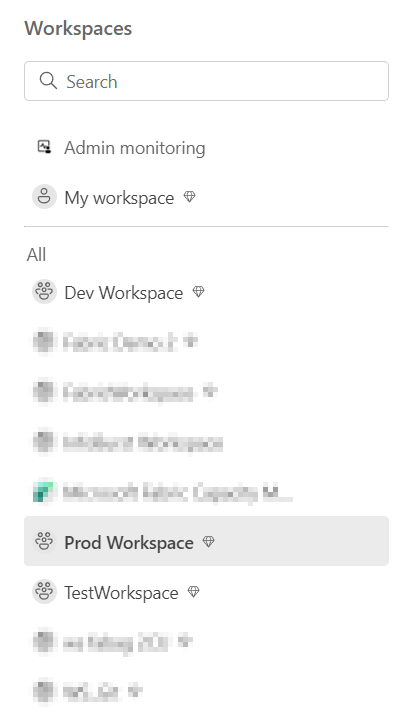

Workspaces

In Fabric, you have the option to create multiple workspaces; think of them as Dev, Test and Prod.

When you’ve developed something really cool in Dev that’s ready for production, you might want it tested first. I know, we don’t make mistakes and every line of code is perfect, but in the unlikely event that something is awry, let’s make sure it’s caught in Test.

Git and Pipelines

The ancient way is to copy and paste all the code, hope you got the settings correct and go for it. I hope you’ve seen that there are better options, like Git or Azure DevOps. One of the things that comes with them is a way of deploying resources.

GitHub Actions, Azure DevOps pipelines and deployment pipelines all try to achieve one goal: a structured way of working with CI/CD (Continuous Integration / Continuous Deployment). This doesn’t mean that your deployment runs continuously though. What it tries to achieve is, in my experience, a controlled way to develop code, test it and deploy only validated and working code into production. This way, production should never break with new releases, and code only reaches production after reviews and testing.

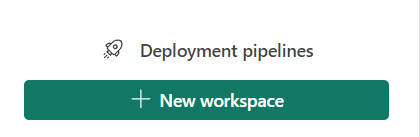

Now, when you look in the overview of the workspaces, there’s this option:

Besides creating new workspaces, you can create a deployment pipeline. Remember that this is something different than a copy data pipeline.

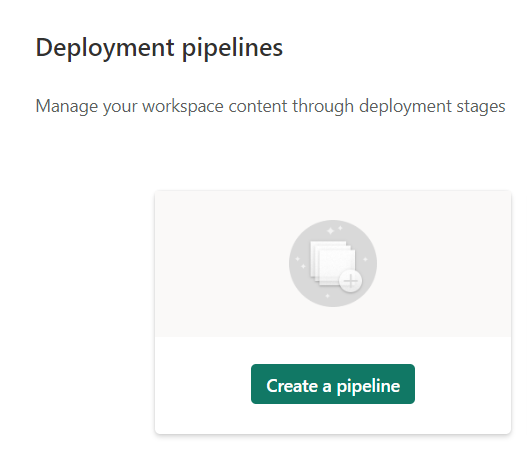

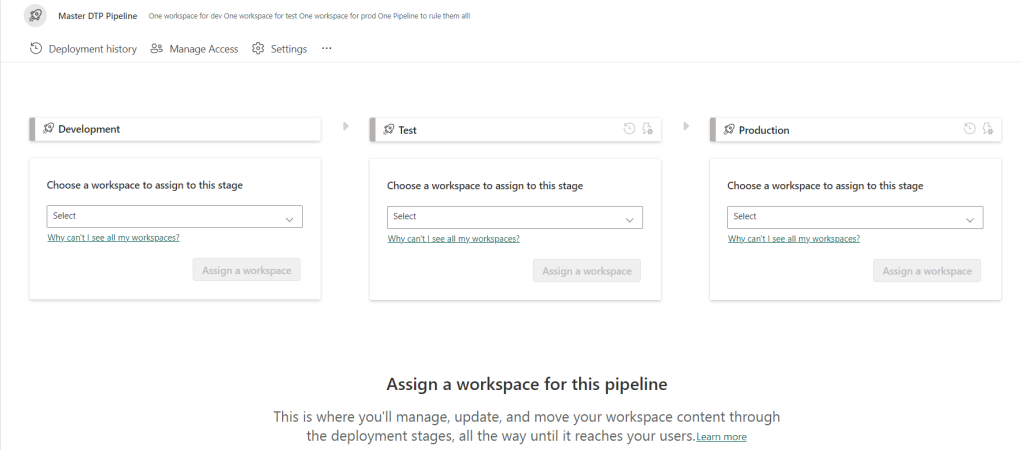

When you click on it, you’ll see this screen:

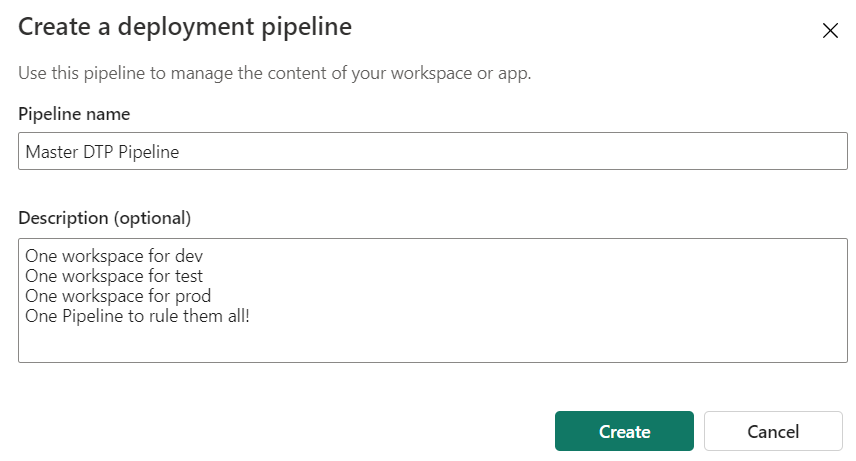

Next you need to give the pipeline a name:

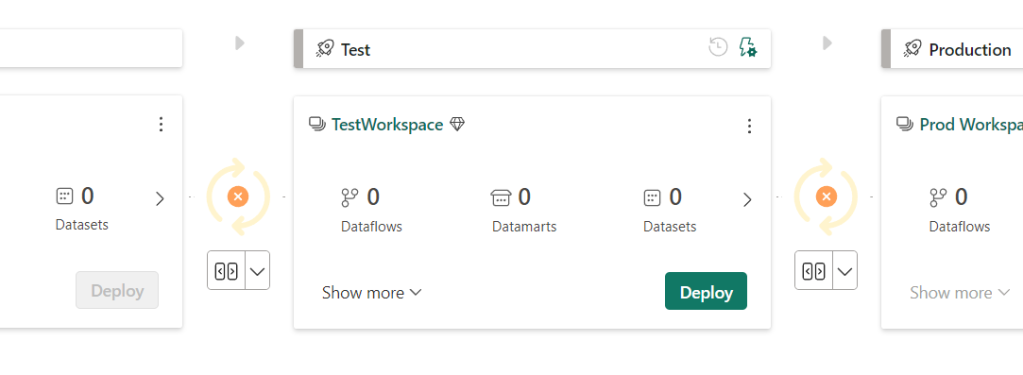

When you’ve created it, you get the option to assign workspaces to the pipeline stages (or steps, or whatever you wish to call them).

Select the workspace and then assign it. Each workspace is checked for items, and they show up in the list.

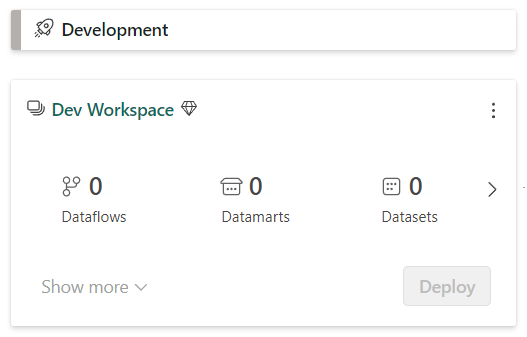

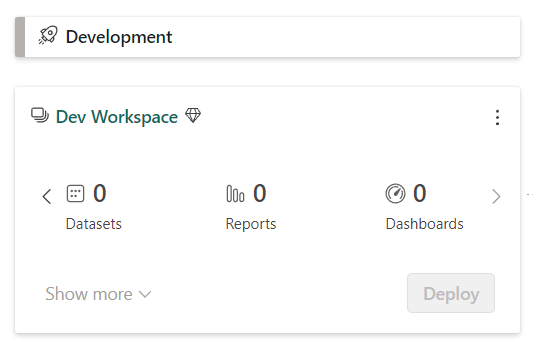

Right now, this workspace is empty. I’ll create a copy data pipeline and see what happens next.

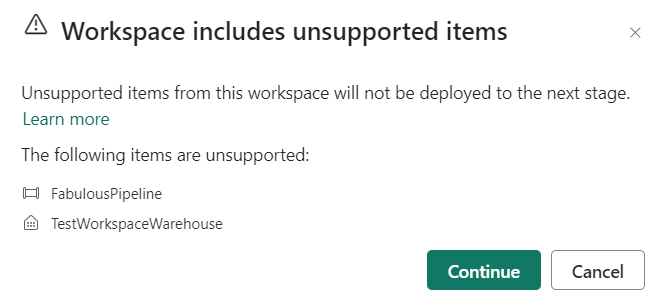

Unsupported artefacts

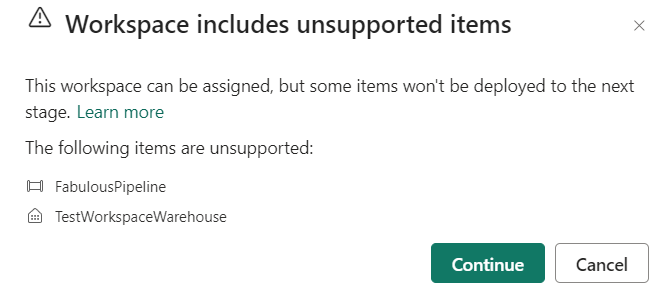

As you can see from the screenshot above, a pipeline isn’t supported and doesn’t show up. When you unassign a workspace and reconnect it, you’ll get a warning for this:

Hopefully more features will be added soon. You can read the complete list here.

Supported artefact (report)

To show what happens when a supported item is created, I’ve let Fabric create a report for me. A really amazeballs fabulous one. Without a pie chart even!

Now let’s see what happens. Like most of us, I like to develop in production, and to get rid of that habit I need to take small steps to the left. So, my report and other things are developed in Test. Thank you, I’m proud of me as well.

But the pipeline is brutally honest, pointing out the differences automatically. I didn’t have to refresh or do anything manually.

As you can see, there’s a button to show the changes:

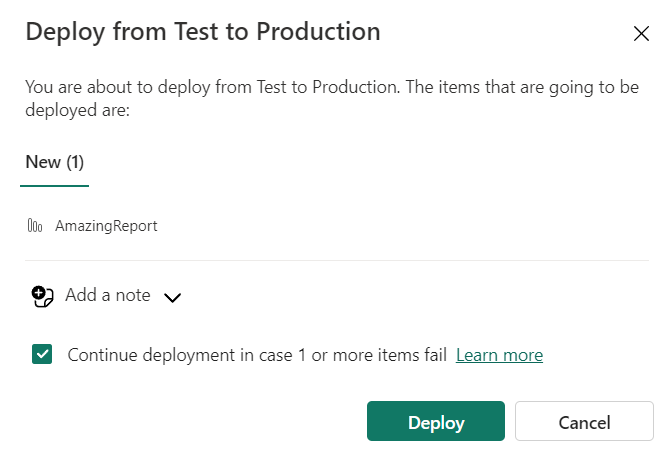
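To make the comparison a bit more concrete, here is a minimal sketch of how two stages could be compared. This is not how Fabric does it internally; the `Item` class, the `version` field and the status labels are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    """Hypothetical stand-in for a workspace item; not a Fabric type."""
    name: str
    kind: str     # e.g. "Report" or "Dataset"
    version: int  # assumed marker for "the content has changed"


def compare_stages(source, target):
    """Label each (name, kind) pair as 'new', 'different', 'same' or 'missing from source'."""
    target_by_key = {(i.name, i.kind): i for i in target}
    source_keys = {(i.name, i.kind) for i in source}
    result = {}
    for item in source:
        key = (item.name, item.kind)
        other = target_by_key.get(key)
        if other is None:
            result[key] = "new"
        elif other.version != item.version:
            result[key] = "different"
        else:
            result[key] = "same"
    # Items that only exist in the target stage are reported as well.
    for key in target_by_key:
        if key not in source_keys:
            result[key] = "missing from source"
    return result


# The report exists in Test but not in Dev, so it is flagged as new.
test_stage = [Item("AmazingReport", "Report", 1)]
dev_stage = []
print(compare_stages(test_stage, dev_stage))
```

The point of the sketch is only that the comparison is keyed on the item itself, so nothing has to be refreshed by hand to see what differs.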

The green deploy button allows me to deploy the report to the Dev environment. Let’s do that!

OK, technically it’s a previous stage and not the next one but let’s not nitpick.

A note can be added to link it to work items, or describe why the artefact is promoted. But I wanted to deploy to Dev, not to production. At least, not yet.

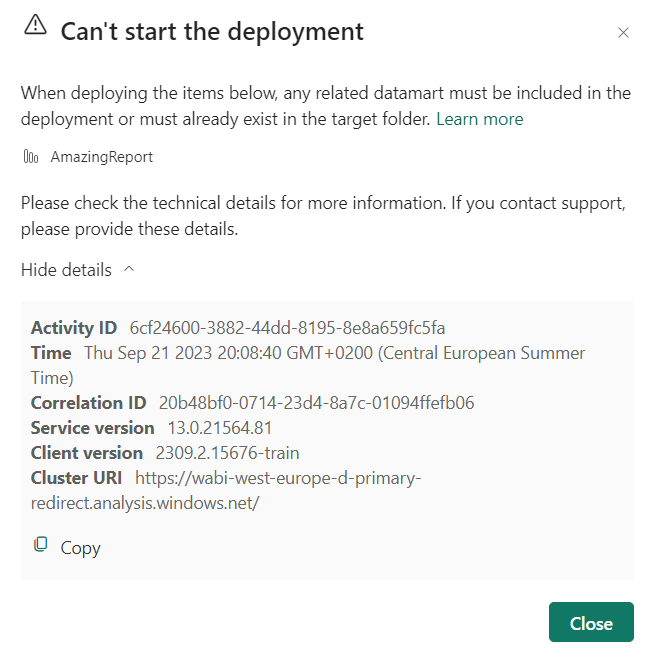

This one rightfully fails. In a way I’m happy this happens, as the dataset isn’t available in either Dev or Prod. Had the report been deployed, the only result would have been errors on opening it. It’s good that the checks are strict.

It also shows that all the items should be supported so all the changes can be deployed in a controlled way.
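The kind of check that makes this deployment fail can be sketched roughly as follows. The function name, the dependency mapping and the dataset name `AmazingDataset` are hypothetical; the real validation in Fabric is not documented in this post.

```python
def missing_dependencies(selected, depends_on, target_items):
    """Return, per selected item, the dependencies that would be absent after deployment."""
    # A dependency is fine if it already exists in the target stage
    # or is part of the same deployment.
    available = set(target_items) | set(selected)
    problems = {}
    for item in selected:
        missing = [dep for dep in depends_on.get(item, []) if dep not in available]
        if missing:
            problems[item] = missing
    return problems


# Assumed setup: the report depends on a dataset that exists in neither target stage.
depends_on = {"AmazingReport": ["AmazingDataset"]}
problems = missing_dependencies(["AmazingReport"], depends_on, target_items=[])
if problems:
    print("Deployment blocked:", problems)
```

Deploying the dataset together with the report, or having it in the target stage already, would leave `problems` empty in this sketch.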

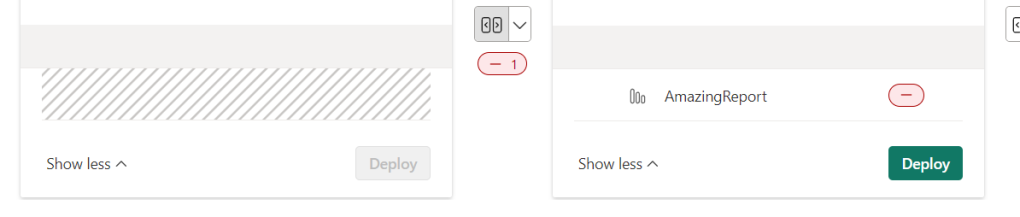

Filter artefacts to deploy

Suppose you have more artefacts in your workspace, are they all deployed no matter what?

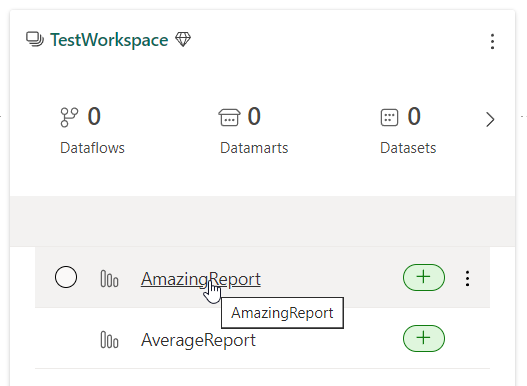

No, take a look at this:

As you can see, to the left of my AmazingReport there’s a checkbox. Check it and only that report will be deployed. Leave everything unchecked and all the artefacts will be deployed. So you can easily deploy them all or make a selection.
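The selection rule is easy to misread, so here it is as a tiny sketch. The function and the second item name are made up for illustration; only the rule itself (nothing checked means everything) comes from what the screen does.

```python
def items_to_deploy(all_items, checked):
    """Nothing checked means everything is deployed; otherwise only the checked items."""
    if not checked:
        return list(all_items)
    return [item for item in all_items if item in checked]


workspace = ["AmazingReport", "OtherReport"]  # "OtherReport" is a hypothetical second item
print(items_to_deploy(workspace, checked=[]))
print(items_to_deploy(workspace, checked=["AmazingReport"]))
```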

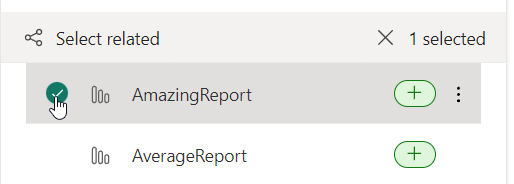

When you select an artefact and you’re not sure if there are other dependent items, there’s this option:

Click on the Select related button at the top left of this screenshot and the related items (if supported of course) will be added to your deployment. And that makes it more failsafe to deploy from test to prod!
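What a “select related” step could do is sketched below, assuming it follows dependencies transitively and skips unsupported items. Both are assumptions on my side, and the item names and the `depends_on` mapping are hypothetical.

```python
def select_related(selected, depends_on, supported):
    """Extend the selection with every supported item it depends on, directly or indirectly."""
    chosen = list(selected)
    queue = list(selected)
    while queue:
        current = queue.pop()
        for dep in depends_on.get(current, []):
            # Unsupported items are skipped, so nothing behind them is followed either.
            if dep in supported and dep not in chosen:
                chosen.append(dep)
                queue.append(dep)
    return chosen


depends_on = {"AmazingReport": ["AmazingDataset"], "AmazingDataset": ["SourcePipeline"]}
supported = {"AmazingReport", "AmazingDataset"}  # the data pipeline is not supported
print(select_related(["AmazingReport"], depends_on, supported))
```

In this sketch the unsupported pipeline is left out without any warning, which is exactly the gap that makes full artefact support so important.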

My opinion on the current state

The idea is amazing and has a lot of potential. When all the artefacts in a workspace are supported AND you can deploy downstream as well as upstream between development and test, it will be another amazing addition to the Fabric toolbox.

However, I can imagine that deployments will only be possible in an upstream manner as this is the way it’s meant to be. I get that but the honest reality is that developments take place in production and sometimes need to be promoted to Test or Dev. Also at times you might want to sync Test or Dev with the current Prod environment to make sure development and tests are done to the correct configuration of artefacts.

If you agree with this argument, please support my idea on the ideas site.

Thanks for reading and happy deploying!

Thanks for sharing your view on deployment pipelines. I use them regularly but am uncertain which approach works best, given the missing option to deploy data pipelines to the next environment. Currently I save the data pipeline in the other environment and set the respective destinations via variables. Is there a better solution for the still-existing issue that data pipelines are not supported?
