Or maybe I should say, with some complications. Or challenges.

When I got the job to restore a bacpac file, I didn’t think much of it. Because it’s quite easy. Get the bacpac and restore it with Azure Data Studio or Sql Server Management Studio. Get coffee, done. Then I got these requirements:

- The bacpac needs to be downloaded daily from an sFTP server, without certificate

- The bacpac file is in a zip file

- The zip file is password protected

- Everything must be done serverless (Azure Automation, Logic App, Function App, Data Factory and/or Synapse Analytics)

- It’s a daily process that needs to be done without human supervision

Now dear reader, we need to jump through hoops. Because a password protected zipfile can be a (very) good idea, but doesn’t exactly make life easy.

My first instinct was to Powershell all the way. That sort of works but has serious issues with unzipping password protected files. And yes, the 7Zip for Powershell module was found but not working in a Function App.

SoI had to use most of the things I know about Azure and learn a new language to get it all done.

Before I dig into the solution, some things I learned along the way.

Automation and Apps

They are quite cool, can do a lot of stuff but are less fun when debugging. Why? Debugging Azure Automation means queueing the job, starting the job and then executing it. Count yourself lucky if actually starting your automation job takes less than a minute. And, you won’t see anything happen until the proces is done. Then you’ll get the output, in my case a sea of red, angry messages.

Running a job in a Function app will return a result, but getting the details of the run can take up to 5 minutes for the logging service to display. That’s not really fun if you see something going wrong but you need the error quick. Depending on the language you’ve chosen for your function app, you can or cannot develop code in the portal. PowerShell is fine, Python isn’t.

You can only connect your function app’s to Github, Azure DevOps isn’t an option funnily enough.

The only service that is really fast with displaying results (good or bad) are the logic apps. All you have to do (and that can be a challenge in itself) is find the right connector for your task. The logic apps are quite straightforward. They’re not really very flexible but very good at what they are supposed to do.

Running PowerShell in a function app is very different and has to many limitations if you’re not in the enterprise version to really work (for me that was). Running Python was fine though.

Tips.

If you’re developing your code, use a VM to iron out most of the wrinkles before you deploy your code into serverless options. It will save you a lot of time and frustration. Make sure the VM is in the correct subscription and virtual network. Depending on the service you’re planning to use, do or do not use a managed identity.

Either create a Github account for your code to save it from ‘accidents’, or manually store it in Azure DevOps. Funnily enough, these resources lack DevOps integration.

Language!

No, not my bad language when things didn’t go my way but the different options. The function apps have a few on offer and that gives it enough flexibility to fit most use cases. For my solution I got away with PowerShell in the Automation and Python in the Logic App.

Logic apps support C# (.NET), JavaScript (Node.js), Python, Java, Powershell (Core), TypeScript (Node.js) and other languages using custom handlers.

Azure Automation supports PowerShell and Python. There are some details there as well, look them up here.

Network integration

Well, there’s some room for improvement there. Because unless you start shelling out, network integration just isn’t there. What you do get with function apps is a long list of IP addresses used by the service. Guess what a supplier is going to say when I give them 20-ish different ones.

Private endpoints are quite the same, only the premium priced stuff get private endpoints. Which is a bit expensive if the use case is to just do one job once a day.

Logic Apps: No network integration

Function Apps: Only integration in Premium stuff

Azure Automation: Private Endpoints available, even in basic

If you want to connect any of these services to a public IP address, you might want to read this link and think of your best option.

People

Knowing people is key. I’m not well versed in Python, no really not! So I found a script online that could unzip a database for me. And didn’t work. Luckily one of our new coworkers (trainee) is a very smart lady (Astronomy graduate) and she knows Python. And she helped me out by rewriting the code.Without her, I’d still be wondering about weird error messages about HTTP requests or missing files.

Solution

So, what did you build then?

Well, I’m using Azure Automation as the primary point to run everything in, just because a Function App has a time-out of 9 minutes and 59 seconds. After that, the process gets rebooted. And everything did take more time than that.

The AA job first triggers the logic app that downloads the ZIP file to a storage account. When the download is done, the AA job triggers the Python Function App to extract the data from the password protected zipfile and copy the data to a date folder structure (year/month/day). The Python script is variable driven, so filename, foldernames and password are sent in as a variable. The sensitive password is retrieved from the Key Vault so no sensitive data in any scripts. In the code below I’ve removed the hardcoded stuff and replaced it with <> brackets to draw your, and the debuggers, attention. To be clear, it’s all compiled from code found on the internets, so no proprietary stuff there.

After extracting the bacpac file, the AA job starts by dropping the existing database because you’re not allowed to overwrite it. It then restores the DB and when completed, sends an ‘all good’ signal. This is done because in the end Data Factory is going to orchestrate the process. After a restore, Data Factory can do it’s job and the end users can get the data to refresh their reports.

Show me the money! I mean code! Show me the Code!

Ok, calm down.

Here’s the Azure Automation stuff, all PowerShell.

#Login to Azure using your managed identity

Connect-AzAccount -Identity

# Set the Azure context to the correct subscription

Set-AzContext -Subscription '<choose yours>'

#Get the Key Vault Secrets (make sure your identity has Key Vault Secrets User rights assigned

$pass = Get-AzKeyVaultSecret -VaultName "<just the keyvault name>" -Name "<secret name>" -AsPlainText

$StorageToken = Get-AzKeyVaultSecret -VaultName "<just the keyvault name>" -Name "<SecretName>" -AsPlainText

$SqlPw = Get-AzKeyVaultSecret -VaultName "<just the keyvault name>" -Name "<Secret Name>" -AsPlainText

#Invoke the Logic App

Invoke-WebRequest -Uri '<Your Logic App Link>' -Method POST

#Define the target path in your datalake

$targetPath = '/FolderName/' + (Get-Date -Format "yyyy") + '/' + (Get-Date -Format "MM") + '/' + (Get-Date -Format "dd") + '/'

$storageUri = 'https://<StorageName>.blob.core.windows.net/datalake' + $targetPath + '/TargetFileName'

#Parameters for the Python Function App

$Parameters= @{

fileName = '<SourceFileName>'

folderFile = '<SourceFolderName>'

password = $pass

folderPath = $targetPath

connString = $StorageToken

} | ConvertTo-Json -Compress

Invoke-WebRequest -Uri '<Your Function App Link>' -Method POST -Body $Parameters -Headers @{'Content-Type' = 'application/json; charset=utf-8'}

# Drop the existing database to allow for restore

Remove-AzSqlDatabase -ResourceGroupName "<Your Resource Group>" -ServerName "<Your servername, not the FQDN!>" -DatabaseName "<Your Database name>"

#Restore the Database

$importRequest = New-AzSqlDatabaseImport `

-ResourceGroupName "<Your Resource Group>" `

-ServerName "<Your servername, not the FQDN!>" `

-DatabaseName "<Your Database name>" `

-DatabaseMaxSizeBytes "2147483648" ` #2GB

-StorageKeyType "StorageAccessKey" `

-StorageKey $(Get-AzStorageAccountKey -ResourceGroupName "<Your Resource Group Name>" -StorageAccountName "<Your Storage account name, not the FQDN!>" ).Value[0] `

-StorageUri $storageUri `

-Edition "Standard" `

-ServiceObjectiveName "S6" `

-AdministratorLogin "<Your SQL Admin Login name, use Cloud... if not configured during deployment>" `

-AdministratorLoginPassword $(ConvertTo-SecureString -String $SqlPw -AsPlainText -Force)

#Print the status, handy for debugging

$importStatus = Get-AzSqlDatabaseImportExportStatus -OperationStatusLink $importRequest.OperationStatusLink

Write-Host "Import started, let's get the status."

while ($importStatus.Status -eq "InProgress") {

$importStatus = Get-AzSqlDatabaseImportExportStatus -OperationStatusLink $importRequest.OperationStatusLink

Write-Host "status: " $importStatus.StatusMessage

Start-Sleep -s 30

}

Write-Host "Done, let's get the final import status: " $importStatus

#Save money, reset database to much lower SKU

Write-Host "Reset Database to S0"

Set-AzureSqlDatabase -ServerName "<Your servername, not the FQDN!>" –Database "<DatabaseName>" -ServiceObjective "S0" -Edition "Standard" -Force

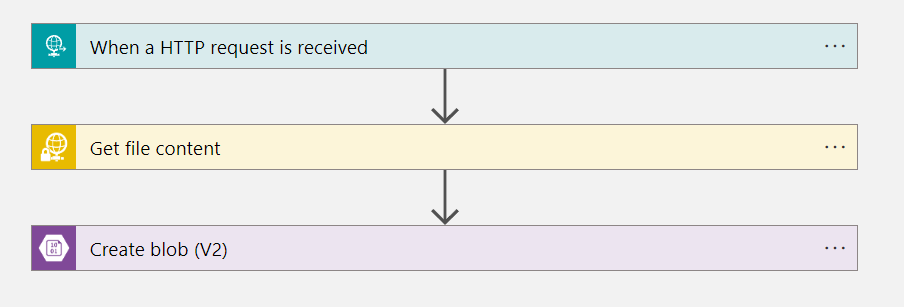

This is the lay-out of the Logic App: Get the file and write it to the Blob storage (also known as my datalake but sshhhh ;))

Get the data from the password protected zipfile and write it to the correct folder. This is all in Python.

import azure.functions as func

import uuid

import os

import shutil

from azure.storage.blob import ContainerClient

from zipfile import ZipFile

import logging

app = func.FunctionApp(http_auth_level=func.AuthLevel.FUNCTION)

@app.route(route="sqlDbUnzip")

def sqlDbUnzip(req: func.HttpRequest) -> func.HttpResponse:

logging.info('Python HTTP trigger function processed a request.')

try:

reqBody = req.get_json()

fileName = reqBody['fileName']

folderFile = reqBody['folderFile']

zipPass = reqBody['password']

folderPath = reqBody['folderPath']

connString = reqBody['connString']

storageAccountConnstr = connString

container = '<ContainerName>'

# Define local temp path, on Azure, the path is recommended under /home

tempPathRoot = "/home/temp/"

unZipTempPathRoot = "/home/unZipTemp/"

unZipTempPath = unZipTempPathRoot + str(uuid.uuid4())

os.makedirs(tempPathRoot, exist_ok=True)

os.makedirs(unZipTempPath, exist_ok=True)

container_client = ContainerClient.from_connection_string(storageAccountConnstr, container)

logging.info("Downloading zip file")

zipFilePath = tempPathRoot + fileName

with open(zipFilePath, "wb") as my_blob:

try:

download_stream = container_client.get_blob_client(folderFile).download_blob()

my_blob.write(download_stream.readall())

except:

logging.info("Error downloading zip file")

else:

logging.info("file downloaded successfully")

logging.info("Unzipping to temp folder")

with ZipFile(zipFilePath) as zf:

zf.extractall(path=unZipTempPath, pwd=bytes(zipPass, 'utf-8'))

logging.info("Uploading all files in temp folder")

for root, dirs, files in os.walk(unZipTempPath):

for file in files:

filePath = os.path.join(root, file)

destBlobClient = container_client.get_blob_client(filePath.replace(unZipTempPath, folderPath))

with open(filePath, "rb") as data:

destBlobClient.upload_blob(data, overwrite=True)

except Exception as e:

logging.error(f"An error occurred: {e}")

return func.HttpResponse(f"An error occurred: {e}", status_code=500)

finally:

logging.info("Removing all temp files")

shutil.rmtree(unZipTempPath, ignore_errors=True)

os.remove(zipFilePath)

return func.HttpResponse("Process completed successfully", status_code=200)

# https://stackoverflow.com/questions/65277324/unzip-password-protected-zip-file-automatically-from-azure-storage

If this code fails at first, make sure you add these lines to the requirements file:

Some advice, take your time reading the script and understanding what it does. Also, familiarise yourself on how to create Python Logic Apps, there’s some special stuff going on there. This link will help you on your way.

Should you get the message on deployment that HTTP triggers could not be found, this could have something to do with your trigger, but it’s way more likely that you have an error in your code that the IDE isn’t showing. That one cost me around 6 hours to find out…

Easier ways?

Maybe everything could have been built in Python. Or maybe there are PowerShell modules to make life easier. On the other hand, it was a very nice way to get some hands on experience with the different Azure services and their ‘challenges’. In any case, if you’ve read this far this might have helped you out for which I’m grateful.

Thanks for reading!

One thought on “Restoring a bacpac file, but differently.”